Media Accessibility

In one way or another I’ve been working on media accessibility for over a decade now – this post is an attempt to collect my thoughts on the subject and provide a useful resource.

Accessibility is not a new word, although some may now spell it a11y (the word accessibility consists of an “a”, 11 letters and a “y”). This way of spelling accessibility is linked to the web accessibility movement.

Of course, the concept of accessibility pre-dates the web. For example, the idea that all people should have convenient access to buildings is not a particularly new one.

Whether it’s buildings or websites, the concept of accessibility is broadly the same – the design and implementation of products, services, environments, and digital content that can be accessed and used by individuals with diverse abilities and disabilities. It aims to eliminate barriers and ensure equal participation, inclusion, and independence for all individuals, regardless of their physical, sensory, cognitive, or technological limitations.

It's an often overlooked fact that making your media more accessible should benefit everybody, regardless of ability.

A note on the use of the word “media” which has many connotations – for the purposes of this article I’m talking predominantly about web-based audiovisual media – in other words the audio and video files we consume on the web in our browsers.

The Rise and Rise of Audiovisual Media

With the advent of more widely available, more affordable and faster internet connections (at least in the western world) we’ve seen a significant shift from predominantly written media to various types of audiovisual media. Early web media pioneers include WinAmp and RealPlayer, which positioned themselves as niche ways of providing audio and video playback.

These days we’ve seen the huge growth and success of platforms such as Spotify and Netflix, not to mention Instagram and TikTok. And although, of course, people were watching and listening to audiovisual media way before the web was conceived, it feels like the sheer quantity and diversity of media now available is unprecedented.

My background is in web-based media. 14 years ago I co-created jPlayer – a library that allowed web developers to easily and reliably add audio to web pages (video followed soon after).

The jPlayer library was extremely popular, saw millions of downloads and was used by media services such as Pandora.

A couple of years later I started to think about the accessibility of media on the web, and that’s when a project called Hyperaudio was born. Hyperaudio is all about linking the spoken word with audio or video on the web – our interactive transcripts facilitate navigation, search and general accessibility. Fast forward a decade (or so) and we're witnessing a growing number of tools that leverage the powerful connection between text and audio.

Making your Media Accessible

Guidelines on how to make media accessible are few and far between, so in this blog post I propose some steps people can take to make audiovisual content more accessible. Let’s take a look at them.

Playback Speed

One of the nice things about humans is the way we speak. We all have different accents, vocabularies and pace. It turns out that there is quite a bit of variation in the rate and cadence of speech.

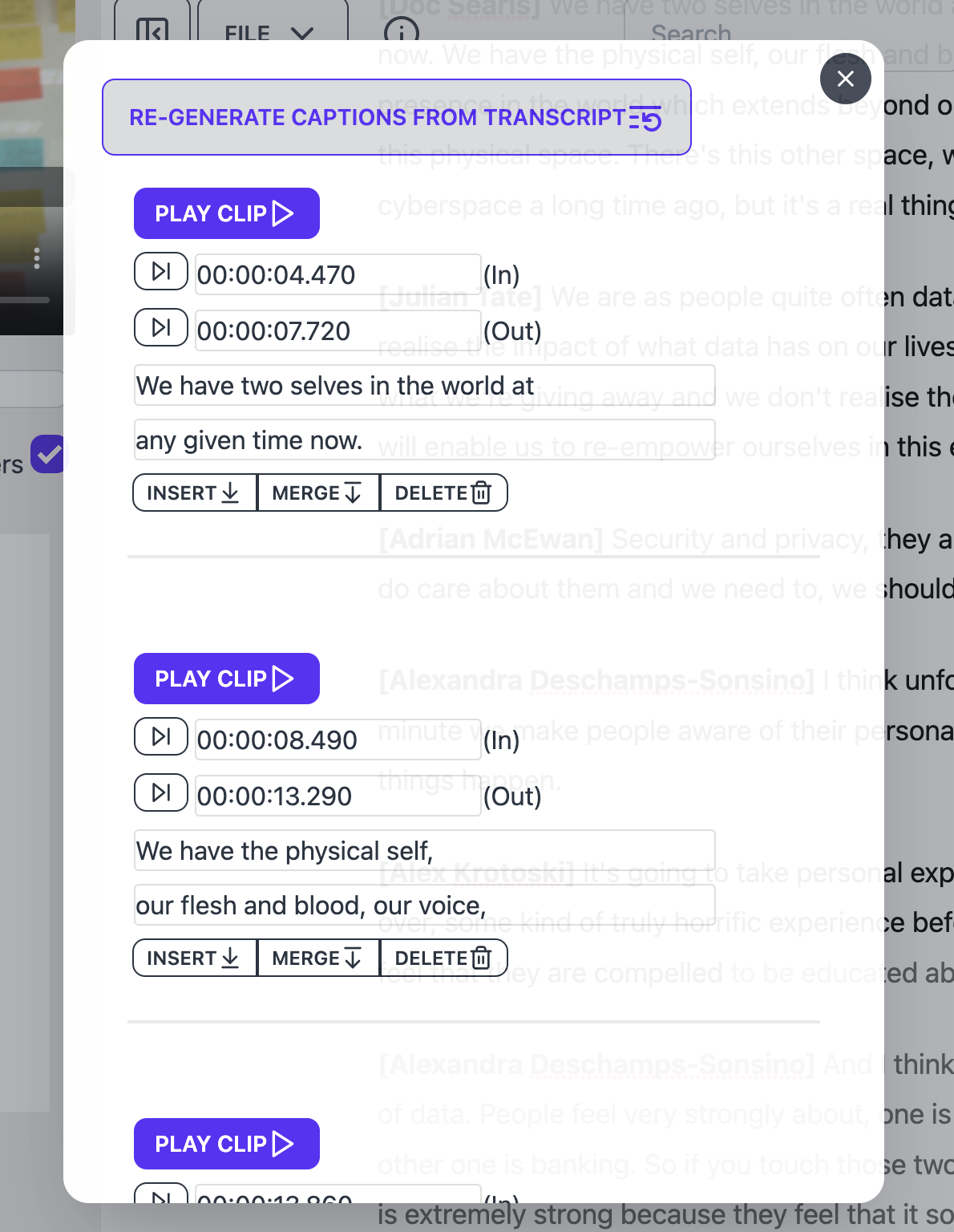

Luckily, if you're developing with web technologies, it is straightforward to let the user control playback speed, thanks to the playbackRate property of audio and video elements.

Controlling the rate of playback is useful because it allows people to consume audio at the speed they’re most comfortable with – that might be slower if you’re consuming a language or accent you’re not very familiar with, or faster if you want to be efficient with time.

Generally useful playback rates range from 0.5 (half speed) to a maximum of 3 (triple speed) and increments of no more than 0.25 are advised.
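
As a rough sketch of that guidance, the helper below clamps a requested rate to the 0.5 to 3 range and snaps it to 0.25 steps. The function and constant names are illustrative and not taken from any library; the only real API assumed is the `playbackRate` property that audio and video elements expose.

```typescript
// Bounds and step taken from the guidance above; the names are illustrative.
const MIN_RATE = 0.5;
const MAX_RATE = 3;
const RATE_STEP = 0.25;

// Anything with a playbackRate property, e.g. an <audio> or <video> element.
interface RateControllable {
  playbackRate: number;
}

// Clamp a requested rate into [MIN_RATE, MAX_RATE] and snap it to the nearest step.
function normalizeRate(requested: number): number {
  if (!Number.isFinite(requested)) return 1; // fall back to normal speed
  const snapped = Math.round(requested / RATE_STEP) * RATE_STEP;
  return Math.min(MAX_RATE, Math.max(MIN_RATE, snapped));
}

// Move the rate up (+1) or down (-1) by one step and apply it to the player.
function stepRate(player: RateControllable, direction: 1 | -1): number {
  const next = normalizeRate(player.playbackRate + direction * RATE_STEP);
  player.playbackRate = next;
  return next;
}
```

In a browser the `player` argument could be the audio or video element itself, so a "faster" button would simply call `stepRate(element, 1)`.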

A nice feature of the playback rate property is that, by default, the pitch of the audio stays the same whichever rate you choose. This avoids the chipmunk effect and keeps speech as comprehensible as possible.

If the native audio or video player is used there is usually a way to change the playback speed from the player, but these are generally a little hidden and not always available on mobile devices.

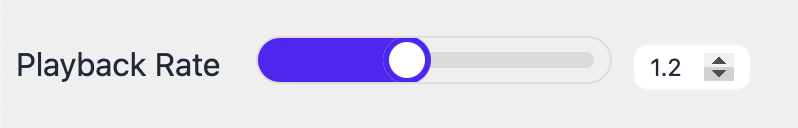

In a recent project I decided to expose the playback speed below the audio/video player and allowed a couple of modes of input – slider with corresponding value shown which can be typed or incremented or decremented.

Fig 1 – Image of the Playback Rate implementation in the Hyperaudio Lite Editor

Keyboard Shortcuts

There are a number of common keyboard shortcuts that we can employ to allow users to navigate media from the keyboard, if they are not already provided by the native player (where the spacebar is often a shortcut to play/pause media).

- Play/Pause – Ctrl+Space or Tab

- Rewind – Ctrl+R or ` (back tick)

- Set playback rate – Ctrl+Alt+1 up to 0
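
The shortcuts above could be wired up along these lines. This is a sketch under a few assumptions: the type and function names are made up for illustration, the mapping from digit keys to playback rates is hypothetical (the list does not specify one), and the Tab alternative is left out because Tab normally moves keyboard focus.

```typescript
// The subset of KeyboardEvent fields this sketch relies on.
interface KeyInfo {
  code: string; // physical key, e.g. "Space", "KeyR", "Digit1"
  ctrlKey: boolean;
  altKey: boolean;
}

type PlayerAction =
  | { type: "togglePlay" }
  | { type: "rewind" }
  | { type: "setRate"; rate: number };

// Hypothetical mapping of digit keys to rates: 1 -> 0.5, 2 -> 0.75 ... 0 -> 2.75.
function rateForDigit(digit: number): number {
  const slot = digit === 0 ? 10 : digit; // treat 0 as the tenth key
  return 0.5 + (slot - 1) * 0.25;
}

// Translate a key press into a player action, or null if it is not a shortcut.
function shortcutAction(key: KeyInfo): PlayerAction | null {
  const digit = /^Digit(\d)$/.exec(key.code);
  if (key.ctrlKey && key.altKey && digit) {
    return { type: "setRate", rate: rateForDigit(Number(digit[1])) };
  }
  if (key.ctrlKey && !key.altKey && key.code === "Space") return { type: "togglePlay" };
  if (key.ctrlKey && !key.altKey && key.code === "KeyR") return { type: "rewind" };
  if (!key.ctrlKey && !key.altKey && key.code === "Backquote") return { type: "rewind" };
  return null;
}
```

A browser `keydown` event already has the `code`, `ctrlKey` and `altKey` fields, so it can be passed in directly. For handled keys it is worth calling `preventDefault()` so that, where the browser allows it, a combination such as Ctrl+R does not also trigger its built-in behaviour.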

Fullscreen and Picture in Picture

It’s often a good idea to let people choose the size of the video they are viewing. Most video players will provide a full screen option and more recently the web-native video element will allow you to toggle Picture in Picture (or PiP) mode. PiP detaches the video from its holder – putting it in its own window which can sit anywhere on the screen, even outside the browser while allowing the viewer to set the size.

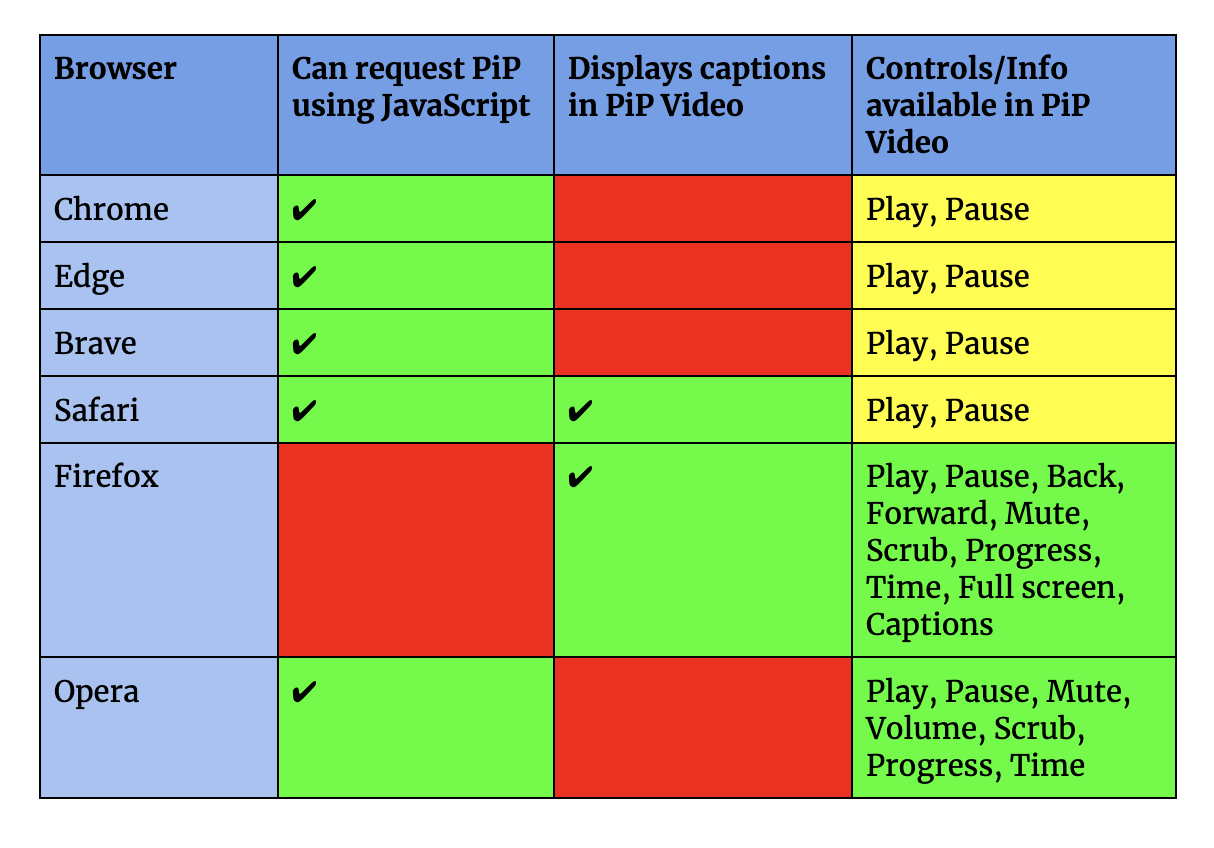

PiP can be toggled on and off in most browsers but since it is a fairly new feature, there can be limitations to what you can do with it and differences between browser implementations.

For example in Firefox, PiP can only be triggered from the native video player, because the requestPictureInPicture() method is currently unsupported.
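
Because of differences like this, it seems sensible to feature-detect before offering a custom PiP button. The sketch below uses small structural types instead of DOM typings so that it stands alone; `canRequestPip` and `togglePip` are illustrative names, while `pictureInPictureEnabled`, `pictureInPictureElement`, `exitPictureInPicture()` and `requestPictureInPicture()` are the members defined by the Picture-in-Picture API.

```typescript
// Minimal structural types so the sketch does not depend on DOM typings.
interface PipDocument {
  pictureInPictureEnabled?: boolean;
  pictureInPictureElement?: unknown;
  exitPictureInPicture?: () => Promise<void>;
}

interface PipVideo {
  requestPictureInPicture?: () => Promise<unknown>;
}

// True when script-triggered PiP looks available for this document and video.
function canRequestPip(doc: PipDocument, video: PipVideo): boolean {
  return (
    doc.pictureInPictureEnabled === true &&
    typeof video.requestPictureInPicture === "function"
  );
}

// Enter PiP, or leave it if this video is already detached.
async function togglePip(
  doc: PipDocument,
  video: PipVideo
): Promise<"entered" | "exited" | "unsupported"> {
  if (!canRequestPip(doc, video)) return "unsupported";
  if (doc.pictureInPictureElement === video && doc.exitPictureInPicture) {
    await doc.exitPictureInPicture();
    return "exited";
  }
  await video.requestPictureInPicture!();
  return "entered";
}
```

In a page you would pass `document` and the video element, and call `togglePip` from a click handler, since browsers generally require a user gesture before allowing a video to detach. When the result is `"unsupported"`, the custom button can be hidden and the native controls relied on instead.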

One aspect I do like about PiP on Firefox is that it displays captions inside the detached PiP video, as does Safari, whereas Blink-based browsers (Chrome, Edge, Opera and Brave) currently display captions in the original video “holder”.

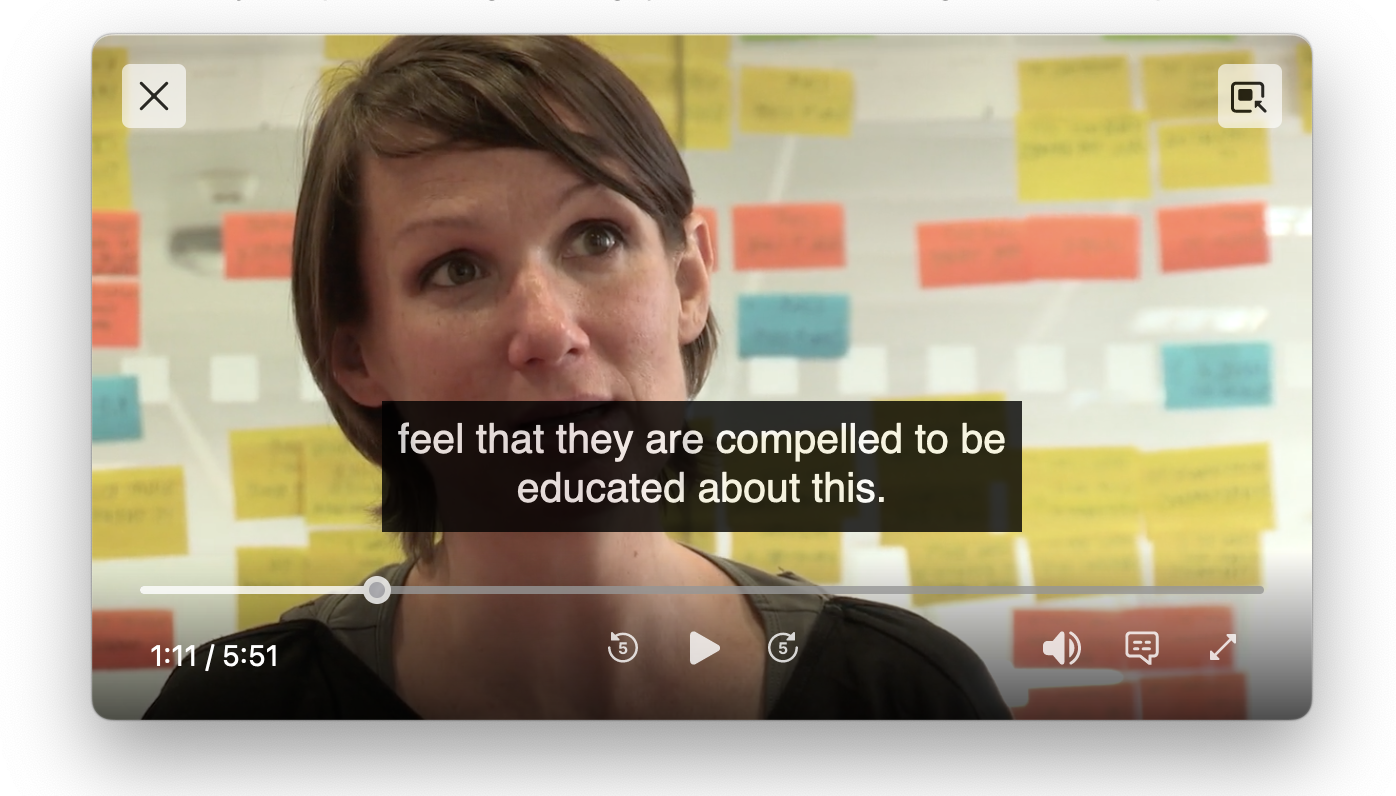

Fig 3 – Detached Picture in Picture in Firefox 115

Note, however, that sometimes only a subset of controls is available in the PiP video player. Firefox provides the best level of control, while Chrome and Safari essentially just provide play and pause.

Fig 4 – A chart showing PiP browser compatibility

Adaptive Streaming

Most streaming services take advantage of adaptive streaming as a way to provide the best experience for various bandwidths. Adaptive streaming works by selecting from a number of streams depending on bandwidth detected. Usually this means that the higher the bandwidth, the higher the quality of the stream, where quality usually refers to the resolution of the video, but could include other variations such as audio quality.

The idea is that should your bandwidth drop below a certain level, a suitable stream is picked so that playback is uninterrupted.
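
To make the selection step concrete, here is a simplified sketch of how a player might pick a stream. Real HLS and DASH players use more elaborate heuristics (buffer levels, smoothing of bandwidth estimates), so treat this as an illustration only. The `Rendition` shape, the 0.8 headroom factor and the optional user cap are all assumptions made for the example.

```typescript
interface Rendition {
  label: string;      // e.g. "720p"
  bitrateBps: number; // average bitrate needed to play this stream
}

// Pick the highest-bitrate rendition that fits within the measured bandwidth,
// leaving some headroom. An optional cap lets the viewer limit data usage.
function pickRendition(
  renditions: Rendition[],
  measuredBps: number,
  userCapBps: number = Infinity,
  headroom: number = 0.8 // assumed safety factor, not a standard value
): Rendition {
  if (renditions.length === 0) throw new Error("no renditions available");
  const sorted = [...renditions].sort((a, b) => a.bitrateBps - b.bitrateBps);
  const budget = Math.min(measuredBps * headroom, userCapBps);
  let choice = sorted[0]; // lowest quality is the fallback
  for (const rendition of sorted) {
    if (rendition.bitrateBps <= budget) choice = rendition;
  }
  return choice;
}
```

Falling back to the lowest rendition rather than failing means playback can continue, at reduced quality, even when the connection is worse than every available stream expects.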

Most services (YouTube, Vimeo etc) will adaptively stream by default. If you want to take more control over that process you can use services such as Mux.com or even roll your own MPEG-DASH (Dynamic Adaptive Streaming over HTTP) or HLS (HTTP Live Streaming) based solutions following the guides available on MDN.

Note that support for HLS and DASH varies and so you may need to include HLS.js or Dash.js JavaScript libraries to ensure compatibility.

Up to now we’ve talked about delivering the best quality media that bandwidth allows, but I think there’s another important aspect for accessibility – in many parts of the world, bandwidth can be charged per amount used and so it makes sense to let users choose the quality stream they consume, as high bandwidth streams may be prohibitively expensive.

Thumbnails

One of the challenges of using audio and video is the efficient navigation of content. Modern streaming platforms usually provide a way to quickly scan content by displaying a small still image of a frame (often known as a thumbnail) at any given point, usually navigated by hovering, dragging on desktop and mobile or scrolling using a TV remote on smart TVs.

Thumbnail functionality is not built into native browser video players, so you’ll either have to create your own or use one of the popular video player libraries or services.
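
If you do create your own, one common approach is to pre-render thumbnails into a single sprite sheet and work out which tile to show for a given time. The sketch below assumes that approach; the `SpriteSheet` layout (one tile every few seconds, arranged in a grid) and all the names are hypothetical.

```typescript
// Assumed sprite-sheet layout: one thumbnail every `intervalSec` seconds,
// laid out left to right, top to bottom, in a grid `columns` wide.
interface SpriteSheet {
  intervalSec: number;
  columns: number;
  tileWidth: number;
  tileHeight: number;
  tileCount: number;
}

// Return the pixel offset of the tile that covers the given playback time.
function thumbnailOffset(
  sheet: SpriteSheet,
  timeSec: number
): { x: number; y: number } {
  const raw = Math.floor(Math.max(0, timeSec) / sheet.intervalSec);
  const index = Math.min(raw, sheet.tileCount - 1); // clamp to the last tile
  return {
    x: (index % sheet.columns) * sheet.tileWidth,
    y: Math.floor(index / sheet.columns) * sheet.tileHeight,
  };
}
```

The returned offsets can then be applied, negated, as the background position of a small preview element shown while the user hovers over or drags the progress bar.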

Waveforms

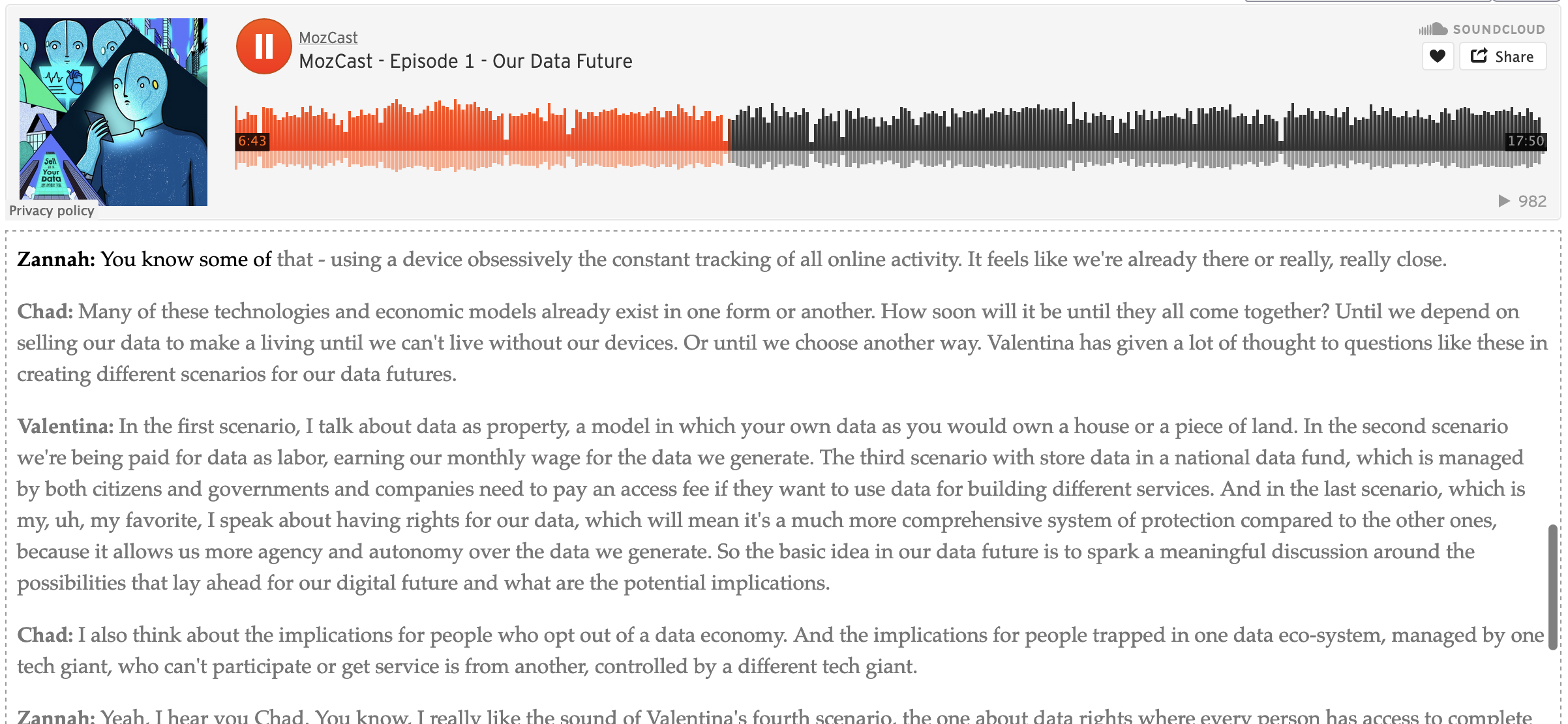

Waveforms are a visual representation of the audio track and are most useful for audio content such as podcasts. It’s not easy to establish content from a waveform, but it does indicate silent or lower volume sections of audio. Waveforms are not provided natively within browser audio players and few off-the-shelf players provide this functionality by default.

Fig 5 – The Hyperaudio Lite Library being used with Soundcloud
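
If you want to draw a simple waveform yourself, the core step is reducing the raw samples to a manageable number of peaks. The following is a minimal sketch of that reduction, not how any particular player does it; `computePeaks` is an illustrative name and the samples are assumed to be values between -1 and 1.

```typescript
// Reduce raw audio samples (values between -1 and 1) to one peak per bucket.
// Produces at most `bucketCount` values, each suitable for drawing one bar.
function computePeaks(samples: Float32Array, bucketCount: number): number[] {
  if (bucketCount <= 0 || samples.length === 0) return [];
  const bucketSize = Math.ceil(samples.length / bucketCount);
  const peaks: number[] = [];
  for (let start = 0; start < samples.length; start += bucketSize) {
    const end = Math.min(start + bucketSize, samples.length);
    let peak = 0;
    for (let i = start; i < end; i++) {
      peak = Math.max(peak, Math.abs(samples[i]));
    }
    peaks.push(peak);
  }
  return peaks;
}
```

In a browser, the samples could come from the Web Audio API, for example by decoding the file with `decodeAudioData` and reading a channel with `getChannelData(0)`. Buckets with a peak near zero correspond to the silent sections mentioned above.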

Captions / Subtitles

Providing captions (sometimes known as subtitles) is probably the most common technique employed to make video more accessible. Captions have been around for a long time and were often burned into video when making it accessible to people who didn’t necessarily speak the original language of the content, although that application is predated by captions for silent films.

Captions generally represent dialog but can also describe other sounds and activity in a video. They are usually positioned at the bottom of the screen, but some captions vary the positioning to suit the underlying imagery.

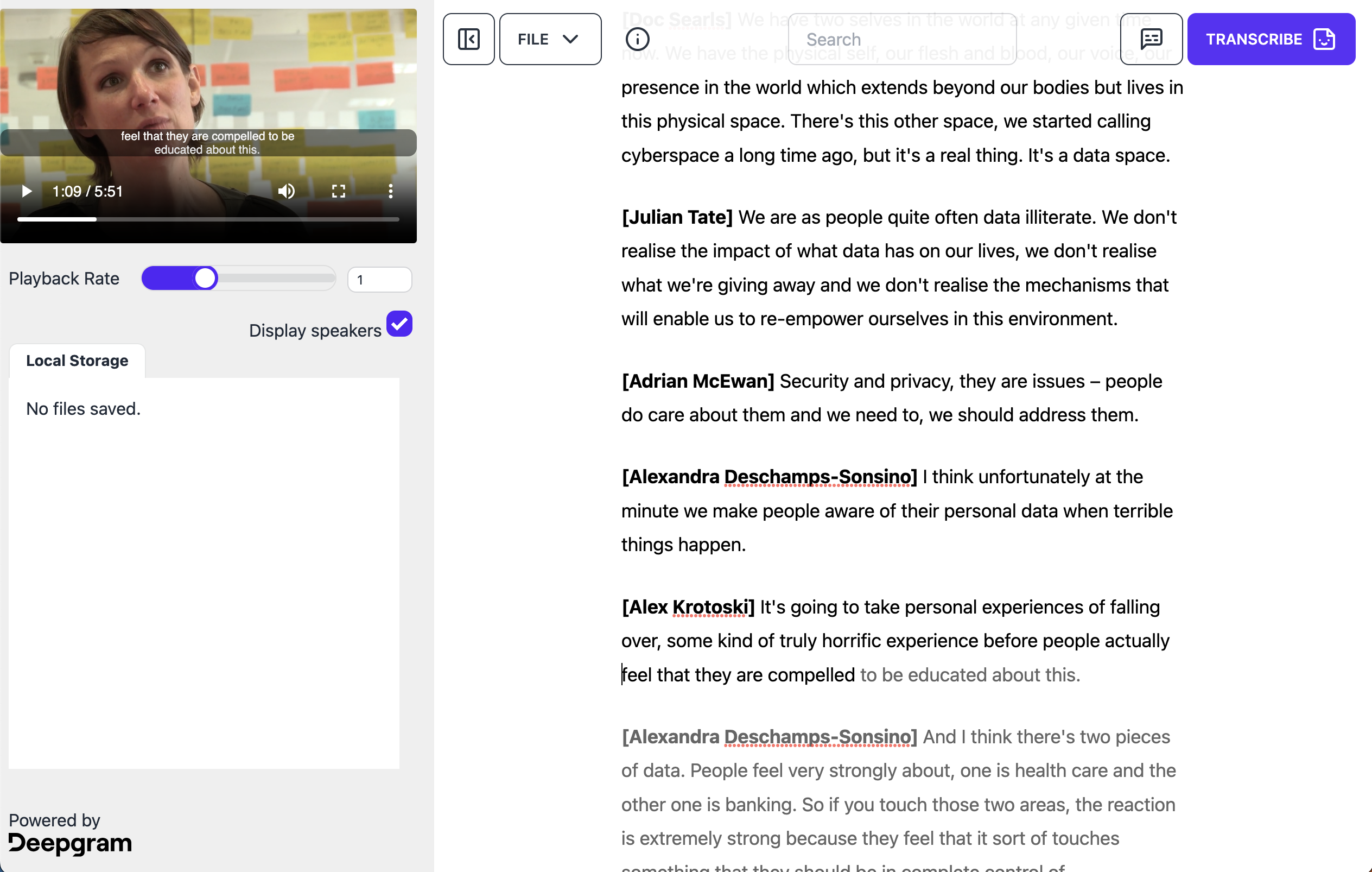

More recently we have seen the introduction of captions that exist separately from the media they describe, allowing captions to be switched on and off and varied in size, and different languages and styles of captioning to be chosen. Colours are often used to denote speakers, and most major broadcasters have guidelines for creating captions that specify the length of captions, where to break lines and other aspects.

Fig 6 – The Hyperaudio Lite Editor's caption editing functionality

Services like YouTube will generate captions automatically, but these can vary in quality. Captioning workflows do exist to create accurate captions and it’s worth looking at services like Amara should you wish to do this yourself. Standard formats exist, meaning captions can be added to most media and services. On the web, a standard called WebVTT is generally used; it was inspired by SRT, a format supported by players such as VLC.
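
To give a feel for the format, here is a small sketch that serialises a list of cues into a basic WebVTT file. The `Cue` shape and function names are illustrative. The sketch covers only the simplest case (a header followed by timed text) and does not escape or validate cue text.

```typescript
interface Cue {
  start: number; // seconds
  end: number;   // seconds
  text: string;
}

// Format seconds as a WebVTT timestamp: hh:mm:ss.ttt
function formatTimestamp(seconds: number): string {
  const totalMs = Math.round(Math.max(0, seconds) * 1000);
  const ms = totalMs % 1000;
  const totalSec = Math.floor(totalMs / 1000);
  const s = totalSec % 60;
  const m = Math.floor(totalSec / 60) % 60;
  const h = Math.floor(totalSec / 3600);
  const pad = (n: number, width: number) => String(n).padStart(width, "0");
  return `${pad(h, 2)}:${pad(m, 2)}:${pad(s, 2)}.${pad(ms, 3)}`;
}

// Serialise cues into a WebVTT document: a "WEBVTT" header, then one block
// per cue, with blocks separated by blank lines.
function toWebVtt(cues: Cue[]): string {
  const blocks = cues.map(
    (cue) =>
      `${formatTimestamp(cue.start)} --> ${formatTimestamp(cue.end)}\n${cue.text}`
  );
  return ["WEBVTT", ...blocks].join("\n\n") + "\n";
}
```

The resulting text can be saved as a `.vtt` file and attached to a video with a `<track>` element, which is what lets the browser switch captions on and off.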

Captions are extremely useful for people with hearing or learning difficulties, but as is often the case, improving accessibility can benefit all. How many of us consume video on social media with the volume muted?

Transcripts

And so on to an area of accessibility close to my heart – transcripts. As a transcript is usually much easier to scan than a bare audio or video file, transcripts can help with the comprehension of audiovisual media by giving you a good idea of spoken word content.

Interactive Transcripts are right at the core of the Hyperaudio Project. When I started working with transcripts more than a decade ago, Automatic Speech Recognition (ASR) was expensive, slow and extremely unreliable. In fact it was still largely unreliable five years later, when I co-founded Trint to create a tool to address this issue, allowing text correction while maintaining timings.

Fig 7 – The Hyperaudio Lite Editor's interactive transcript view.

Using word timing data (a by-product of ASR) and web technology to make transcripts interactive, we make it easier for content to be navigated (by clicking on words), shared (by selecting passages of text) and even remixed.
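
The two core operations behind an interactive transcript can be sketched in a few lines: finding the word that matches the current playback time (for highlighting) and seeking to a word when it is clicked. This is a generic illustration rather than the Hyperaudio implementation; the `TimedWord` shape and function names are assumptions.

```typescript
interface TimedWord {
  text: string;
  startMs: number; // when the word begins in the media
}

// Binary search for the last word that starts at or before `timeMs`.
// Assumes `words` is sorted by startMs. Returns -1 before the first word.
function activeWordIndex(words: TimedWord[], timeMs: number): number {
  let low = 0;
  let high = words.length - 1;
  let found = -1;
  while (low <= high) {
    const mid = (low + high) >> 1;
    if (words[mid].startMs <= timeMs) {
      found = mid;
      low = mid + 1;
    } else {
      high = mid - 1;
    }
  }
  return found;
}

// Clicking a word seeks the player to that word's start time.
function seekToWord(player: { currentTime: number }, word: TimedWord): void {
  player.currentTime = word.startMs / 1000; // media elements use seconds
}
```

In a page, `activeWordIndex` would typically be called from the media element's `timeupdate` event with `currentTime * 1000`, and `seekToWord` from a click handler on each word.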

Initially we created a suite of tools within Hyperaudio to allow you to align pre-typed transcripts with the audio track. These days we can get great results in a number of languages using open source tools and inexpensive services.

Interactive transcript data is also useful as it can be used to automatically generate captions, timings being key. To this end I’ve spent quite a bit of time recently creating tools that help with the creation of captions. It’s worth noting that while a transcript is usually quite an accurate representation of the spoken word, captions tend to deviate more, so once you’ve generated them it’s important to be able to edit them.
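
As a simplified illustration of why timings are key, the sketch below groups timed words into caption cues, starting a new cue when a character or duration limit would be exceeded. It is not the algorithm used by the Hyperaudio tools; the names are made up and the default limits of 40 characters and 4 seconds are placeholder values rather than a recommendation.

```typescript
interface Word {
  text: string;
  start: number; // seconds
  end: number;   // seconds
}

interface CaptionCue {
  start: number;
  end: number;
  text: string;
}

// Group consecutive words into cues, starting a new cue whenever adding the
// next word would exceed the character or duration limit.
function wordsToCues(
  words: Word[],
  maxChars: number = 40,  // placeholder limit
  maxSeconds: number = 4  // placeholder limit
): CaptionCue[] {
  const cues: CaptionCue[] = [];
  let current: CaptionCue | null = null;
  for (const word of words) {
    const fits =
      current !== null &&
      current.text.length + 1 + word.text.length <= maxChars &&
      word.end - current.start <= maxSeconds;
    if (current !== null && fits) {
      current.text += " " + word.text;
      current.end = word.end;
    } else {
      current = { start: word.start, end: word.end, text: word.text };
      cues.push(current);
    }
  }
  return cues;
}
```

Automatically generated cues like these tend to break in awkward places, for example in the middle of a phrase, so a manual editing pass is usually still needed.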

Finally, whether you use captions or transcripts, you can make your media even more accessible by translating content into different languages. This is something we looked into for the Hyperaudio for Conferences platform; you can read more about that in this post.

If you want to make your media more accessible by adding transcripts and captions, please feel free to use a free and open source tool I’ve been working on called the Hyperaudio Lite Editor. It uses a service called Deepgram to convert speech to text, taking advantage of the latest and greatest ASR such as Whisper. (Deepgram are currently offering 45,000 minutes of transcription for free.)

You may also be interested in the OpenEditor which integrates with Amazon Transcribe and can be hosted on an AWS instance of your choice. It includes a transcript manager and search functionality.

With these free tools you can create, correct and convert transcripts to captions and export them as interactive transcripts. These transcripts can be used together with the Hyperaudio Lite WordPress plugin or, if you're a developer, you can integrate them into any platform using the Hyperaudio Lite JavaScript library.

A quick thank you to WWCI and WTTW for the sponsorship of the development of the Hyperaudio Lite WordPress plugin and OpenEditor.

Conclusion

Media accessibility plays a crucial role in ensuring that individuals with varying capabilities can fully engage with audiovisual content on the web. By implementing certain features, we can enhance the accessibility of media and make it easier for a wider range of people to access and enjoy.

Playback speed control allows users to consume audio at their preferred pace, accommodating different accents, vocabularies, and personal preferences. Fullscreen and Picture in Picture (PiP) options provide flexibility in adjusting the size and placement of video content, improving the viewing experience.

Adaptive streaming ensures seamless playback by dynamically adjusting the quality of the stream based on the user's bandwidth. This not only enhances the overall experience but also allows users in areas with limited bandwidth or high data costs to choose lower quality streams to manage their usage effectively.

Thumbnail navigation and waveforms aid in efficient content scanning and identification of silent or low-volume sections in audio tracks, respectively. Captions/subtitles, an essential accessibility feature, benefit individuals with hearing difficulties and also provide an option for muted video consumption.

Transcripts, particularly interactive transcripts, play a significant role in improving comprehension and navigation of audiovisual media. They allow users to click on words for quick navigation, select and share specific passages, and even facilitate remixing of content. Transcripts also serve as valuable data for generating accurate captions.

While guidelines on media accessibility are limited, implementing these steps and features discussed in this post can contribute to a more inclusive media experience. By making media accessible, we not only cater to individuals with specific accessibility needs but also create an environment that is more user-friendly and convenient for everyone.

As technology continues to advance, it is crucial that we prioritize media accessibility and strive to make it an integral part of content creation and consumption. By embracing these practices and utilising tools like the Hyperaudio Lite Editor, we can make significant strides towards a more accessible and inclusive digital landscape. Let's work together to ensure that audiovisual media is accessible to all individuals, regardless of their abilities or limitations.